Chinese Academy of Sciences

2019-2024

Visualization design, UX design

Data analysis, visualization development, usability analysis

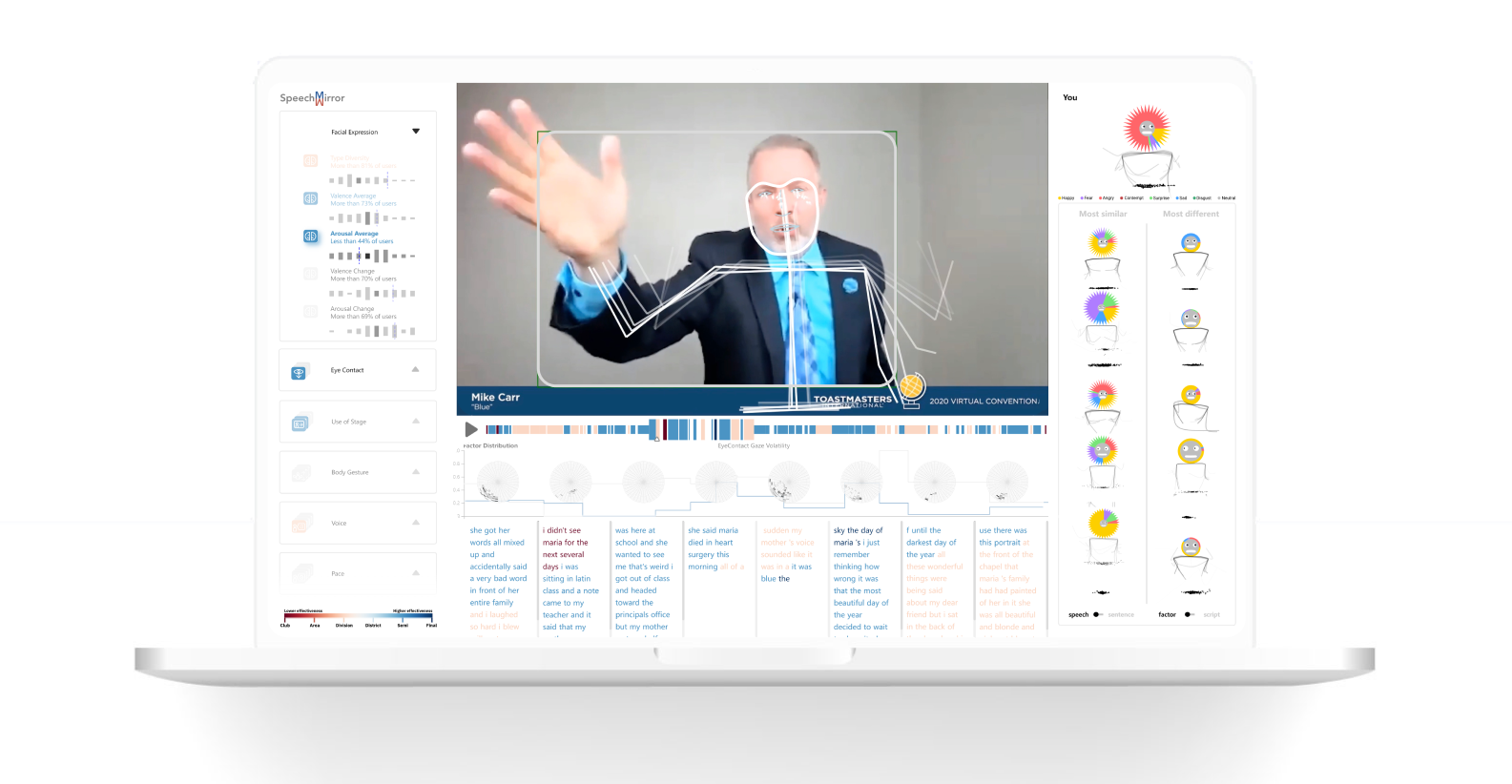

The first large-scale analytics platform for exploring speech effectiveness.

Over four years of collaboration with researchers, we co-created three different visual analytics systems.

At the start of the project, a small-scale analysis showed patterns between a speaker's emotion and contest ranking, inspiring further research.

The founder of Infodesign Studio began to collect contest speeches, wondering whether large-scale analysis could yield insights. The speeches were processed using affective computing methods.

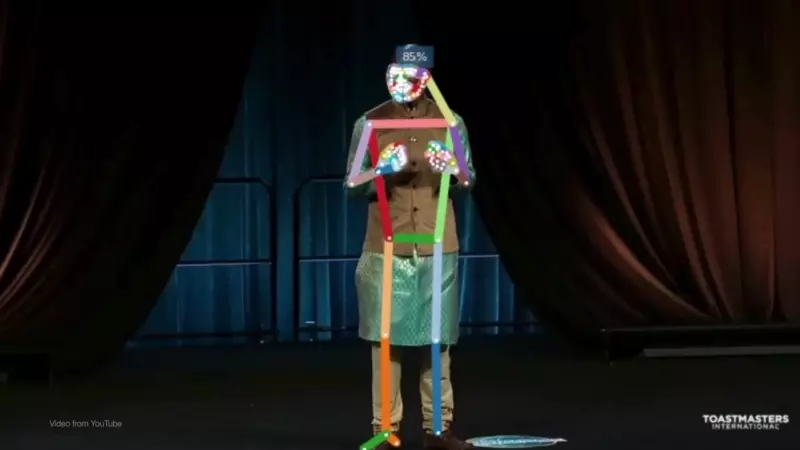

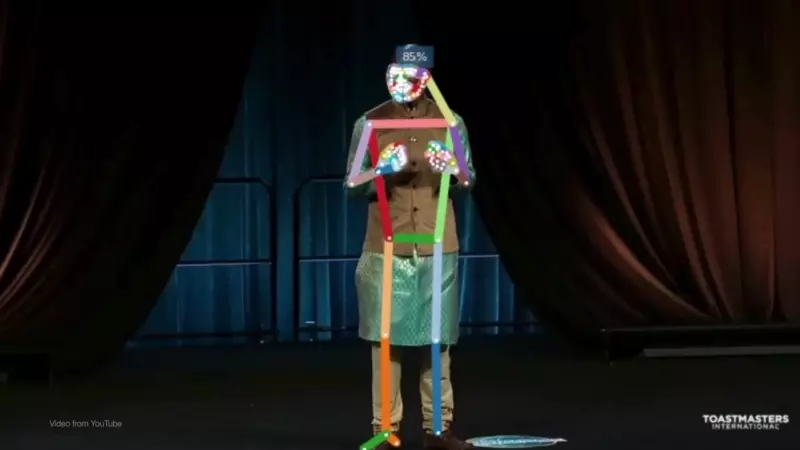

The first system lets speakers test theories about which factors contribute to speech effectiveness. Infodesign Studio defined the analysis metrics and system requirements, and designed the visualizations and the system itself.

Emotional twists were significant in the speeches we researched, so we developed e-spiral to let these shifts be compared.

Are there patterns in speeches related to their success? How do factors such as speech rhythm, vocabulary, and various emotional metrics impact performance across nationalities?

As we found significant relationships between factors, we wondered how best to support speakers in finding patterns on their own. How could data be shown so that people can readily identify those patterns?

E-script directly shows emotion, word speed, pauses, and pace through typography.

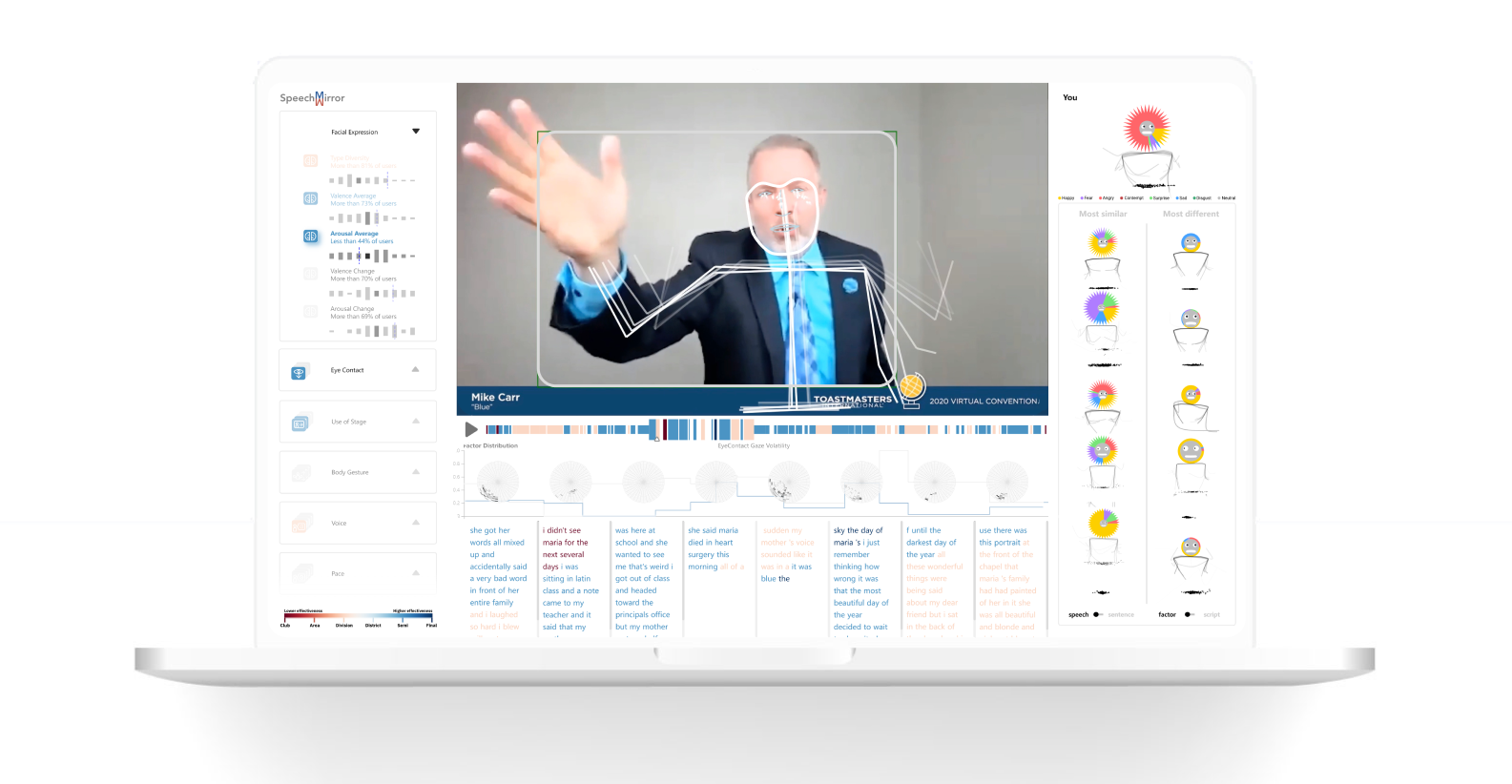

In the next stage of our research, we went beyond testing theories to helping speakers understand how their speeches differ from those of other contestants.

For online speeches, which speeches are the most similar and the most different in terms of emotion, gestures, eye contact, stage movement, and other factors? Could we learn from speakers with different expressive patterns?
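One common way to ask "which speeches are most similar or most different" is to describe each speech as a vector of factor scores and rank the others by cosine similarity. The sketch below is only an illustration of that idea, not the method used in the project: the function names, the speech names, and the four made-up scores per speech (emotion, gestures, eye contact, stage movement) are all hypothetical, and real factors would likely need to be normalized to a common scale first.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length score vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # A vector of all zeros has no direction; treat it as dissimilar.
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(target, others):
    """Return (name, similarity) pairs, most similar first."""
    scored = [(name, cosine_similarity(target, vec)) for name, vec in others.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical scores: [emotion, gestures, eye contact, stage movement]
speeches = {
    "speech_a": [0.9, 0.4, 0.8, 0.2],
    "speech_b": [0.2, 0.9, 0.1, 0.7],
    "speech_c": [0.8, 0.5, 0.7, 0.3],
}
target = [0.95, 0.35, 0.85, 0.15]

ranking = rank_by_similarity(target, speeches)
most_similar = ranking[0][0]
least_similar = ranking[-1][0]
```

With these made-up numbers, the top of the ranking is the speech whose expressive profile points in nearly the same direction as the target, and the bottom is the one a speaker might study for a contrasting style.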

So far, the collaboration has resulted in two patents (with two more pending), expanded project funding, and publications in the top visualization journal TVCG and at the IEEE VIS conference. As technology advances and more accurate methods of quantifying speeches are developed, new ways of delivering insights can help speakers pursue their many goals, whether sales targets, entertainment objectives, or persuasive intents.
